May 5 2022 GM


== Notes == | == Notes == | ||

'' | Leandro is the Global Partnerships Manager, a new role that was created last year as RDR focus on developing closer ties to the digital rights community worldwide. RDR is a first and foremost a research-focused program, housed at New America Foundation. They focus on studying the 26 most powerful tech companies and holding them accountable for their commitments to protect human rights. | ||

Zak is the Research Manager at Ranking Digital Rights. He worked a lot on the [https://rankingdigitalrights.org/index2022/ 2022 Big Tech Scorecard] they just published. It's an evaluation of how much big tech companies respect digital rights with their policies. It compares 14 digital platforms from around the world using 58 indicators about freedom of expression, privacy, and corporate governance. | |||

You can find them as @leandro and @zak_rogoff_rdr on the IFF Mattermost. | |||

'''How can activists use the data OR how have they used the data to further their own work?''' | |||

* Our entire methodology was designed to be open and transparent from the beginning. We explain all the steps and process in detail, and every time we've made changes to the indicators we have documented the discussion and have held consultations with leading experts. | |||

* All the data from the RDR Index, and now the Big Tech Scorecard can be accessed via [https://rankingdigitalrights.org/index2022/explore-indicators our website]: | |||

You can view the results at different levels: at the category level, at the company level, at specific services, and also the scores for specific indicators and elements. | |||

* We are making efforts to make it easier to use our data, so we're starting to develop a [https://rankingdigitalrights.org/index2022/explore Data Explorer]. It provides different kinds of visualizations, such as year-on-year trends and lenses into specific issues. | |||

* You can also download our entire dataset in an xls or csv file, where you can find the most detailed and granular information, including the specific analysis for each of the more than 300 questions we assess, and the sources we used to evaluate them. | |||

'''What are some trends you picked up in the recent report? Readers Digest of the most important things people should know?''' | |||

* Our work is both a scorecard and a roadmap. The indicators set standards that companies should follow and show where they fall short. If you feel that a company is failing to respect users' rights, you can use our findings to figure out attainable but ambitious specific things to demand it to change. | |||

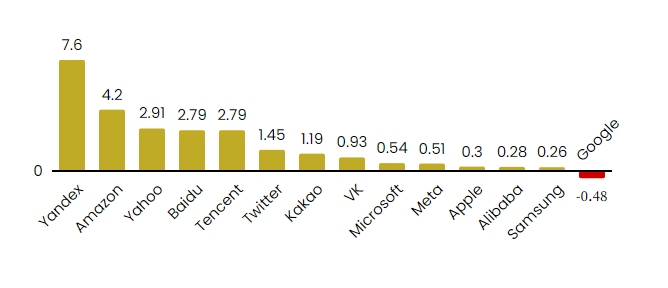

* This shows how the companies we ranked this year changed since the last time we collected data about them in 2020. It shows that Yandex, a Russian company operating under a regime that restricts human rights, actually made the biggest improvement! | |||

[[File:Glitter_Meetup_RDR_May_2022.png]] | |||

'''How do you plan to measure Twitter success/failure for the scorecard after its control falls to Musk? Do you think there will be changes?''' | |||

* Leandro thinks it's too early to tell. If the acquisition does go through, things are going to change pretty quickly. Twitter has been solid in the Freedom of Expression category particularly in the areas that Musk has criticized. | |||

* But RDR will continue to measure it based on any publicly available information, as with all the other companies. It's more important than ever to keep the pressure ongoing. | |||

* Zak adds speculating: if Musk is able to make good on his promise to make the content recommendation algorithm more transparent, that will raise the score. Since our content moderation indicators focus more on transparency, our metrics won't be affected if the content moderation becomes less effective, as long as it's transparent. | |||

'''Can you explain more about the indicators and how you get them? How do you analyze the data?''' | |||

* We use a 7-step research methodology that includes 58 indicators across three categories: governance, privacy, and freedom of expression and information. In total, we evaluate more than 300 questions. The standards we created are grounded in the Universal Declaration of Human Rights and the United Nations Guiding Principles on Business and Human Rights. | |||

* A huge aspect of our research process also involves engaging with the companies. We share preliminary findings for the companies to comment and provide feedback. We then decide what kind of score changes and adjustments may be necessary. | |||

* Here's the [https://rankingdigitalrights.org/methods-and-standards/ page in our website] where you can read more. | |||

'''What was the specific methodologies within the 7-steps?''' | |||

* Here's the overview of how we carry out the research process: | |||

** Step 1: Data collection. A primary research team collects data for each company and provides a preliminary assessment of company performance across all indicators. | |||

** Step 2: Secondary review. A second team of researchers fact-checks the assessment provided by primary researchers in Step 1. | |||

** Step 3: Review and reconciliation. RDR research staff examine the results from Steps 1 and 2 and resolve any differences. | |||

** Step 4: First horizontal review. Research staff cross-check the indicators to ensure they have been evaluated consistently for each company. | |||

** Step 5: Company feedback. Initial results are sent to companies for comment and feedback. All feedback received from companies by our deadline is reviewed by RDR staff, who make decisions about score changes or adjustments. | |||

** Step 6: Second horizontal review. Research staff conduct a second horizontal review, cross-checking the indicators for consistency and quality control. | |||

** Step 7: Final scoring. The RDR team calculates final scores. | |||

'''In the step 5 (Company feedback after your review) of your research process, how do the companies behave? Do they try to fix something, just make it up or ignore it? When you are interacting with employees at these companies, what is their general take of you guys? Is it welcomed? Are you treated like adversaries? is it mxied?''' | |||

* There is definitely variety. Some companies won't even talk to us: the Middle Eastern ones, Chinese ones, and (as of this year) Google. | |||

* At the high-scoring companies and the ones that are rapidly improving, the individuals we talk to are often people who have careers in human rights or transparency. These people agree with our mission and are generally on our side, trying to argue for the same things we want, just internally. | |||

* Despite that, we do have to spend a lot of time fielding questions from the companies that are trying to undercut our methodology, for example saying that the things we want them to do are unnecessary or unreasonable. | |||

'''Have you worked on privacy, transparency and data protection concerns specifically within Google Workspace For Non Profits?''' | |||

* We do evaluate [https://rankingdigitalrights.org/index2022/companies/Google GMail and Google Drive]. We don't look at Google Workspace for Nonprofits as a specific offering though | |||

'''You use 58 indicators about freedom of expression, privacy and corporate governance, and you have 300 questions for assessing. Which question do you consider most important or critical for evaluating companies?''' | |||

* We don't have an official most important one. But when analyzing our data, I usually consider the G4 indicator family to be the most pivotal because it is about human rights risk assessment. | |||

* Here are five indicators in this family: | |||

** https://rankingdigitalrights.org/index2022/indicators/G4a | |||

** https://rankingdigitalrights.org/index2022/indicators/G4b | |||

** https://rankingdigitalrights.org/index2022/indicators/G4c | |||

** https://rankingdigitalrights.org/index2022/indicators/G4d | |||

** https://rankingdigitalrights.org/index2022/indicators/G4e | |||

* If you don't know what the risks of your technology are, it is going to be difficult to prevent harms. Also, these types of risk assessments are rarely required by law, so there is meaningful variation between companies. | |||

* The methodology can be used in many different ways, particularly when doing adaptations (which we encourage!) | |||

* Our methodology has been used in the past to do local and regional studies of companies and services beyond the scope of our own rankings. Here you can find [https://rankingdigitalrights.org/get-involved/ all of those reports]. | |||

* We've been rethinking how we can provide further support to CSOs, and we started to develop materials to lower the barrier of entry to corporate accountability research. | |||

* A good starting point is always developing a strong theory of change, having clear goals that outline what kind of answers you expect to take out from the research. Then selecting indicators based on those goals, no need to do the 58 at once! We can certainly help in that process, I'm always happy to talk and find ways to collaborate | |||

'''How is your engagement with companies in making these evaluations? Do they respond well to your questions/ findings and are they transparent?''' | |||

* For the Big Tech Scorecard, the only Western company that didn't engage with us at all was Google, joining the three Chinese platforms: Tencent, Alibaba, Baidu. | |||

'''This might be a basic question but I'm also curious whether the big tech space in Asia is dominated by Chinese companies?''' | |||

* There's probably someone from Asia who can answer this better than me, but I can say that the big Chinese tech companies are very focused on China itself, but there are definitely Chinese apps that are used a lot in other Asian countries. | |||

* In South Korea and Japan at least, there are big ecosystems of software that come from those countries. | |||

* WeChat by Tencent is a Chinese app that is definitely used a lot outside of China. | |||

Are there any findings that you think are particularly alarming for digital rights activists or that you might anticipate will affect this work in the future? | |||

* This isn't exactly a finding from this year specifically, but to me the most alarming thing is that it's becoming increasingly undeniable that many companies are disclosing policies (which is what we evaluate) and then doing something else entirely. | |||

* That's why the most important ultimate goal of our work is encourage regulation that makes our standards (or something similar) law. | |||

* For example, the Facebook files leaks by whistleblower Frances Haugen show that they just ignore content moderation rules if they are afraid of losing a celebrity user. Not just politicians who are "newsworthy" but just influencers like Ronaldo. | |||

* That's also an area where we want to encourage more interaction and collaboration with the digital rights community. We need to work with activists to point out the company's hypocrisy. | |||

Revision as of 15:12, 5 May 2022


Glitter Meetup is the weekly town hall of the Internet Freedom community at the IFF Square on the IFF Mattermost, at 9am EDT / 1pm UTC. Do you need an invite? Learn how to get one here.

Date: Thursday, May 5th

Time: 9am EDT / 1pm UTC

Who: Leandro Ucciferri

Where: On the IFF Mattermost Square channel.

- Don't have an account on the IFF Mattermost? You can request one by following the directions here.

Using Data from Ranking Digital Rights to Make Your Case

On May 5, Ranking Digital Rights will be available to talk about its 2022 Big Tech Scorecard. The Scorecard evaluates and ranks 14 of the world’s most powerful digital platforms on their commitments to respect users’ fundamental rights, and on the mechanisms they have in place to ensure those promises are kept. We've analyzed hundreds of thousands of data points to come to our conclusions. And all that data is freely available for exploration.

You can use this data in your reports and your activism, and RDR will show you how. Leandro Ucciferri will give some tips on how to use RDR's Data Explorer to answer questions you might have. Here's a link to our scorecard and reports. (The 2022 Big Tech Scorecard launches at the end of April.)

- Leandro Ucciferri is the Global Partnerships Manager at Ranking Digital Rights. He is a lawyer specializing in technology policy and regulation about the right to privacy, data protection, and cybersecurity. Full bio here.

- Zak Rogoff is a Research Manager at Ranking Digital Rights. He works for an internet that truly serves the interests of everyone, and he believes the human rights framework is the best way to get there. Before joining RDR, Zak organized against internet shutdowns with a global coalition at Access Now. He also led campaigns at the Free Software Foundation and worked to rein in government surveillance at U.S.-based Fight for the Future and the Chilean NGO Derechos Digitales.

Notes

Leandro is the Global Partnerships Manager, a new role created last year as RDR focuses on developing closer ties with the digital rights community worldwide. RDR is first and foremost a research-focused program, housed at the New America Foundation. It focuses on studying the 26 most powerful tech companies and holding them accountable for their commitments to protect human rights.

Zak is the Research Manager at Ranking Digital Rights. He worked extensively on the 2022 Big Tech Scorecard (https://rankingdigitalrights.org/index2022/) that RDR just published. The Scorecard evaluates how well big tech companies' policies respect digital rights, comparing 14 digital platforms from around the world using 58 indicators on freedom of expression, privacy, and corporate governance.

You can find them as @leandro and @zak_rogoff_rdr on the IFF Mattermost.

How can activists use the data, or how have they used it to further their own work?

- Our entire methodology was designed to be open and transparent from the beginning. We explain every step of the process in detail, and every time we've changed the indicators, we have documented the discussion and held consultations with leading experts.

- All the data from the RDR Index, and now from the Big Tech Scorecard, can be accessed via our website (https://rankingdigitalrights.org/index2022/explore-indicators). You can view the results at different levels: by category, by company, by specific service, and down to the scores for specific indicators and elements.

- We are working to make our data easier to use, so we've started developing a Data Explorer (https://rankingdigitalrights.org/index2022/explore). It provides different kinds of visualizations, such as year-on-year trends and lenses into specific issues.

- You can also download our entire dataset as an XLS or CSV file. It contains the most detailed and granular information, including the specific analysis for each of the more than 300 questions we assess and the sources we used to evaluate them.
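As a rough illustration of working with the downloaded CSV, the Python sketch below groups one indicator's element scores by company. The column names (`company`, `indicator`, `element`, `score`) and the sample rows are hypothetical placeholders, not the real export format or real RDR data; check the headers of the file you actually download and adjust the constants.

```python
import csv
import io
from collections import defaultdict

# HYPOTHETICAL column names: the real RDR export may use different headers,
# so check the file you download and adjust these constants.
COMPANY_COL = "company"
INDICATOR_COL = "indicator"
SCORE_COL = "score"


def indicator_scores(rows, indicator):
    """Group the element scores of one indicator (e.g. "G4a") by company.

    Rows with an empty score cell (e.g. not applicable) are skipped.
    """
    by_company = defaultdict(list)
    for row in rows:
        if row[INDICATOR_COL] == indicator and row[SCORE_COL].strip():
            by_company[row[COMPANY_COL]].append(float(row[SCORE_COL]))
    return dict(by_company)


# Placeholder rows standing in for the downloaded CSV (NOT real RDR data).
sample = io.StringIO(
    "company,indicator,element,score\n"
    "Company A,G4a,1,100\n"
    "Company A,G4a,2,50\n"
    "Company B,G4a,1,0\n"
    "Company B,G4a,2,\n"
    "Company B,P1a,1,100\n"
)
rows = list(csv.DictReader(sample))
print(indicator_scores(rows, "G4a"))  # {'Company A': [100.0, 50.0], 'Company B': [0.0]}
```

With a real file you would replace `sample` with something like `open("scorecard.csv", newline="", encoding="utf-8")`, where the file name is also a placeholder.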

What are some trends you picked up in the recent report? Can you give us a Reader's Digest version of the most important things people should know?

- Our work is both a scorecard and a roadmap. The indicators set standards that companies should follow and show where they fall short. If you feel that a company is failing to respect users' rights, you can use our findings to identify specific, ambitious but attainable changes to demand from it.

- This chart (Glitter_Meetup_RDR_May_2022.png) shows how the companies we ranked this year have changed since we last collected data on them in 2020. It shows that Yandex, a Russian company operating under a regime that restricts human rights, actually made the biggest improvement!

How do you plan to measure Twitter's success or failure on the scorecard if control passes to Musk? Do you think there will be changes?

- Leandro thinks it's too early to tell. If the acquisition does go through, things are going to change pretty quickly. Twitter has been solid in the Freedom of Expression category, particularly in the areas Musk has criticized.

- But RDR will continue to measure it based on any publicly available information, as with all the other companies. It's more important than ever to keep up the pressure.

- Zak adds, speculatively: if Musk makes good on his promise to make the content recommendation algorithm more transparent, that will raise Twitter's score. Because our content moderation indicators focus mainly on transparency, our scores won't drop if content moderation becomes less effective, as long as it remains transparent.

Can you explain more about the indicators and how you get them? How do you analyze the data?

- We use a 7-step research methodology that includes 58 indicators across three categories: governance, privacy, and freedom of expression and information. In total, we evaluate more than 300 questions. The standards we created are grounded in the Universal Declaration of Human Rights and the United Nations Guiding Principles on Business and Human Rights.

- A huge part of our research process also involves engaging with the companies. We share preliminary findings with the companies so they can comment and provide feedback. We then decide what score changes and adjustments may be necessary.

- You can read more on the methods and standards page of our website (https://rankingdigitalrights.org/methods-and-standards/).
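To show how answers to 300+ questions can roll up into indicator, category, and overall scores, here is a minimal Python sketch. It assumes a simplified scheme (each element earns 100, 50, or 0 points, "n/a" elements are excluded, and every level is a plain average of the level below); this is an illustration under that assumption, not RDR's official scoring code, and the answers shown are hypothetical.

```python
# ASSUMED scheme for illustration: each element earns 100 / 50 / 0 points,
# "n/a" elements are excluded, and each level is a plain average of the
# level below. Check RDR's methods page for the actual scoring rules.
POINTS = {"yes": 100, "partial": 50, "no": 0}


def _avg(values):
    """Average the non-None values; return None if none remain."""
    kept = [v for v in values if v is not None]
    return sum(kept) / len(kept) if kept else None


def indicator_score(answers):
    """Score one indicator from its element answers ("n/a" is excluded)."""
    return _avg([None if a == "n/a" else POINTS[a] for a in answers])


def category_score(indicators):
    """Average the indicator scores in one category: {indicator: answers}."""
    return _avg([indicator_score(answers) for answers in indicators.values()])


def total_score(categories):
    """Average the category scores: {category: {indicator: answers}}."""
    return _avg([category_score(inds) for inds in categories.values()])


# Hypothetical answers for one company (illustrative only, NOT RDR data).
example = {
    "governance": {"G4a": ["yes", "partial", "no"]},
    "freedom_of_expression": {"F1a": ["yes", "yes"]},
    "privacy": {"P1a": ["partial", "n/a"]},
}
print(round(total_score(example), 1))  # 66.7
```

Excluding "n/a" elements rather than counting them as zero keeps a company from being penalized for questions that don't apply to its services.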

What are the specific methods within the 7 steps?

- Here's an overview of how we carry out the research process:

- Step 1: Data collection. A primary research team collects data for each company and provides a preliminary assessment of company performance across all indicators.

- Step 2: Secondary review. A second team of researchers fact-checks the assessment provided by primary researchers in Step 1.

- Step 3: Review and reconciliation. RDR research staff examine the results from Steps 1 and 2 and resolve any differences.

- Step 4: First horizontal review. Research staff cross-check the indicators to ensure they have been evaluated consistently for each company.

- Step 5: Company feedback. Initial results are sent to companies for comment and feedback. All feedback received from companies by our deadline is reviewed by RDR staff, who make decisions about score changes or adjustments.

- Step 6: Second horizontal review. Research staff conduct a second horizontal review, cross-checking the indicators for consistency and quality control.

- Step 7: Final scoring. The RDR team calculates final scores.
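The cross-checking in Steps 3, 4, and 6 can be pictured with a small Python sketch: one function lists the places where the primary and secondary researchers disagree, and another lines up a single element's answers across companies for a horizontal review. The data layout (answers keyed by company and element ID) and the element ID format "G4a.1" are hypothetical assumptions for illustration, not RDR's internal tooling.

```python
# HYPOTHETICAL data layout: answers keyed by (company, element ID); the
# element ID format "G4a.1" is an illustrative assumption.


def disagreements(primary, secondary):
    """Step 3 sketch: keys whose answers differ or exist on only one side."""
    keys = primary.keys() | secondary.keys()
    return sorted(k for k in keys if primary.get(k) != secondary.get(k))


def horizontal_view(answers, element):
    """Steps 4 and 6 sketch: one element's answers side by side across companies."""
    return {company: a for (company, el), a in answers.items() if el == element}


primary = {
    ("Company A", "G4a.1"): "yes",
    ("Company A", "G4a.2"): "partial",
    ("Company B", "G4a.1"): "no",
}
secondary = {
    ("Company A", "G4a.1"): "yes",
    ("Company A", "G4a.2"): "no",
    ("Company B", "G4a.1"): "no",
    ("Company B", "G4a.2"): "partial",
}
for key in disagreements(primary, secondary):
    print(key, primary.get(key), "vs", secondary.get(key))
print(horizontal_view(secondary, "G4a.2"))
```

Treating a missing answer as a disagreement means gaps left by either team are surfaced for staff to resolve instead of silently passing review.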

In step 5 (company feedback on your review) of your research process, how do the companies behave? Do they try to fix something, just make something up, or ignore it? When you interact with employees at these companies, what is their general take on you? Are you welcomed? Are you treated like adversaries? Is it mixed?

- There is definitely variety. Some companies won't even talk to us: the Middle Eastern ones, Chinese ones, and (as of this year) Google.

- At the high-scoring companies and the ones that are rapidly improving, the individuals we talk to are often people who have careers in human rights or transparency. These people agree with our mission and are generally on our side, trying to argue for the same things we want, just internally.

- Despite that, we do have to spend a lot of time fielding questions from companies that are trying to undercut our methodology, for example by arguing that the things we want them to do are unnecessary or unreasonable.

Have you worked on privacy, transparency, and data protection concerns specifically within Google Workspace for Nonprofits?

- We do evaluate Gmail and Google Drive (https://rankingdigitalrights.org/index2022/companies/Google), though we don't look at Google Workspace for Nonprofits as a specific offering.

You use 58 indicators on freedom of expression, privacy, and corporate governance, and more than 300 questions for assessment. Which question do you consider most important or critical for evaluating companies?

- We don't have an official most important one. But when analyzing our data, I usually consider the G4 indicator family to be the most pivotal because it is about human rights risk assessment.

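- Here are the five indicators in this family:

- https://rankingdigitalrights.org/index2022/indicators/G4a

- https://rankingdigitalrights.org/index2022/indicators/G4b

- https://rankingdigitalrights.org/index2022/indicators/G4c

- https://rankingdigitalrights.org/index2022/indicators/G4d

- https://rankingdigitalrights.org/index2022/indicators/G4e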

- If you don't know what the risks of your technology are, it is going to be difficult to prevent harms. Also, these types of risk assessments are rarely required by law, so there is meaningful variation between companies.

- Our methodology can be used in many different ways, particularly when adapting it (which we encourage!).

- Our methodology has been used in the past for local and regional studies of companies and services beyond the scope of our own rankings. You can find all of those reports here: https://rankingdigitalrights.org/get-involved/

- We've been rethinking how we can provide further support to CSOs, and we have started developing materials to lower the barrier to entry for corporate accountability research.

- A good starting point is always to develop a strong theory of change, with clear goals that outline what kind of answers you expect to get from the research. Then select indicators based on those goals; there's no need to do all 58 at once! We can certainly help with that process, and I'm always happy to talk and find ways to collaborate.
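To make the advice about picking indicators by goal concrete, here is a small Python sketch that filters indicator codes by family. Only the G4a to G4e codes come from the discussion above; the other codes (G1, P1a, P10a, F1a) and the goal-to-family mapping are hypothetical examples, so substitute the indicators that fit your own theory of change.

```python
import re

# HYPOTHETICAL goal-to-family mapping: G4 is the human rights risk
# assessment family mentioned above; "P1" is only an illustrative choice.
GOAL_FAMILIES = {
    "risk_assessment": {"G4"},
    "privacy_policies": {"P1"},
}


def indicator_family(code):
    """Return the family prefix of an indicator code, e.g. "G4" for "G4b"."""
    match = re.match(r"[A-Z]+\d+", code)
    return match.group() if match else None


def select_indicators(all_codes, goal):
    """Keep only the indicators whose family matches the research goal."""
    families = GOAL_FAMILIES[goal]
    return [code for code in all_codes if indicator_family(code) in families]


codes = ["G1", "G4a", "G4b", "G4c", "G4d", "G4e", "P1a", "P10a", "F1a"]
print(select_indicators(codes, "risk_assessment"))
print(select_indicators(codes, "privacy_policies"))  # P10a is not in family P1
```

Matching the full family prefix with a regular expression, rather than a plain `startswith` check, keeps a code like "P10a" from being mistaken for a member of family "P1".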

How is your engagement with companies in making these evaluations? Do they respond well to your questions and findings, and are they transparent?

- For the Big Tech Scorecard, the only Western company that didn't engage with us at all was Google, joining the three Chinese platforms: Tencent, Alibaba, and Baidu.

This might be a basic question but I'm also curious whether the big tech space in Asia is dominated by Chinese companies?

- There's probably someone from Asia who can answer this better than me, but I can say that the big Chinese tech companies are very focused on China itself, although there are definitely Chinese apps that are widely used in other Asian countries.

- In South Korea and Japan at least, there are big ecosystems of software that come from those countries.

- WeChat by Tencent is a Chinese app that is definitely used a lot outside of China.

Are there any findings that you think are particularly alarming for digital rights activists or that you might anticipate will affect this work in the future?

- This isn't exactly a finding from this year specifically, but to me the most alarming thing is that it's becoming increasingly undeniable that many companies are disclosing policies (which is what we evaluate) and then doing something else entirely.

- That's why the most important ultimate goal of our work is to encourage regulation that makes our standards (or something similar) law.

- For example, the Facebook Files, based on documents leaked by whistleblower Frances Haugen, show that the company simply ignores its content moderation rules when it is afraid of losing a celebrity user: not only politicians deemed "newsworthy," but also influencers like Ronaldo.

- That's also an area where we want to encourage more interaction and collaboration with the digital rights community. We need to work with activists to point out the companies' hypocrisy.